Ying Chen 陈莹

M.S., Xiamen University


I am a third-year Master’s student in Computer Science at Xiamen University, where I conduct research in medical artificial intelligence under the supervision of Prof. Rongshan Yu at the Biomedical AI Laboratory. I am also a research intern at the Shanghai Artificial Intelligence Laboratory, where I work on generalist medical artificial intelligence under the mentorship of Dr. Junjun He. My research focuses on how AI can transform healthcare, with particular interests in 1) Computational Pathology, 2) Multi-omics, and 3) Multimodal Large Language Models. These experiences have reinforced my commitment to pursuing a Ph.D. and contributing to the development of clinically reliable AI systems. I am actively seeking Ph.D. opportunities for Fall 2026.

Education

- Xiamen University

  M.S. in Computer Science and Technology, Sep. 2023 - Jul. 2026

- South China Normal University

  B.S. in Artificial Intelligence, Sep. 2019 - Jul. 2023

Honors & Awards

- Second Prize, China Undergraduate Mathematical Contest in Modeling (CUMCM), 2021

- Second Prize, MathorCup Mathematical Modeling Challenge, 2021

- Second Prize, Teddy Cup Data Analysis Vocational Skills Competition, 2021

- Scholarship of South China Normal University, 2019, 2020, 2021, 2022

Experience

- Shanghai AI Lab

  Research Intern (Supervisor: Dr. Junjun He), May 2024 - Present

Service

- Conference Reviewer: CVPR, ICCV

- Journal Reviewer: TCSVT, BSPC, JDIM


Selected Publications


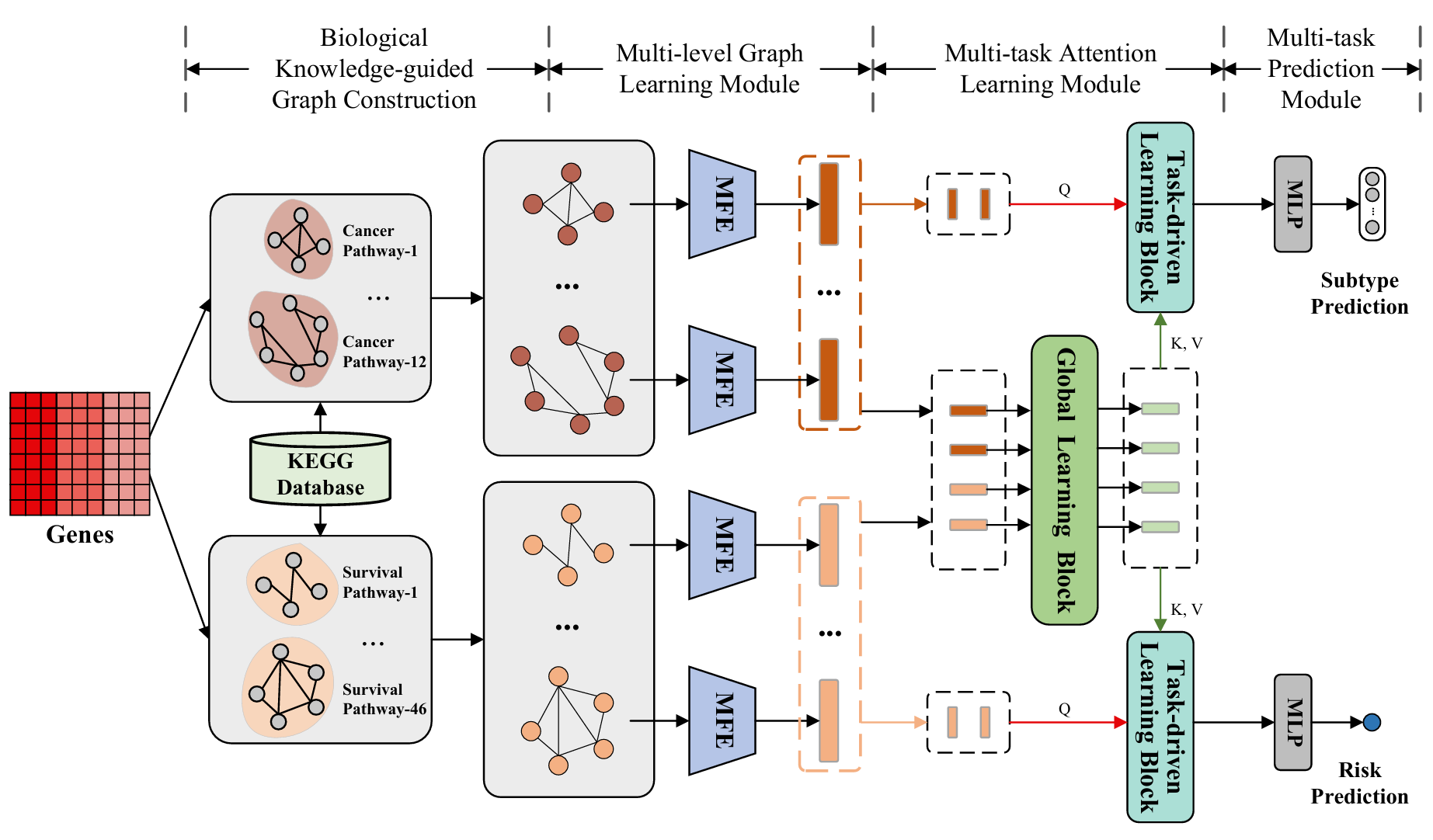

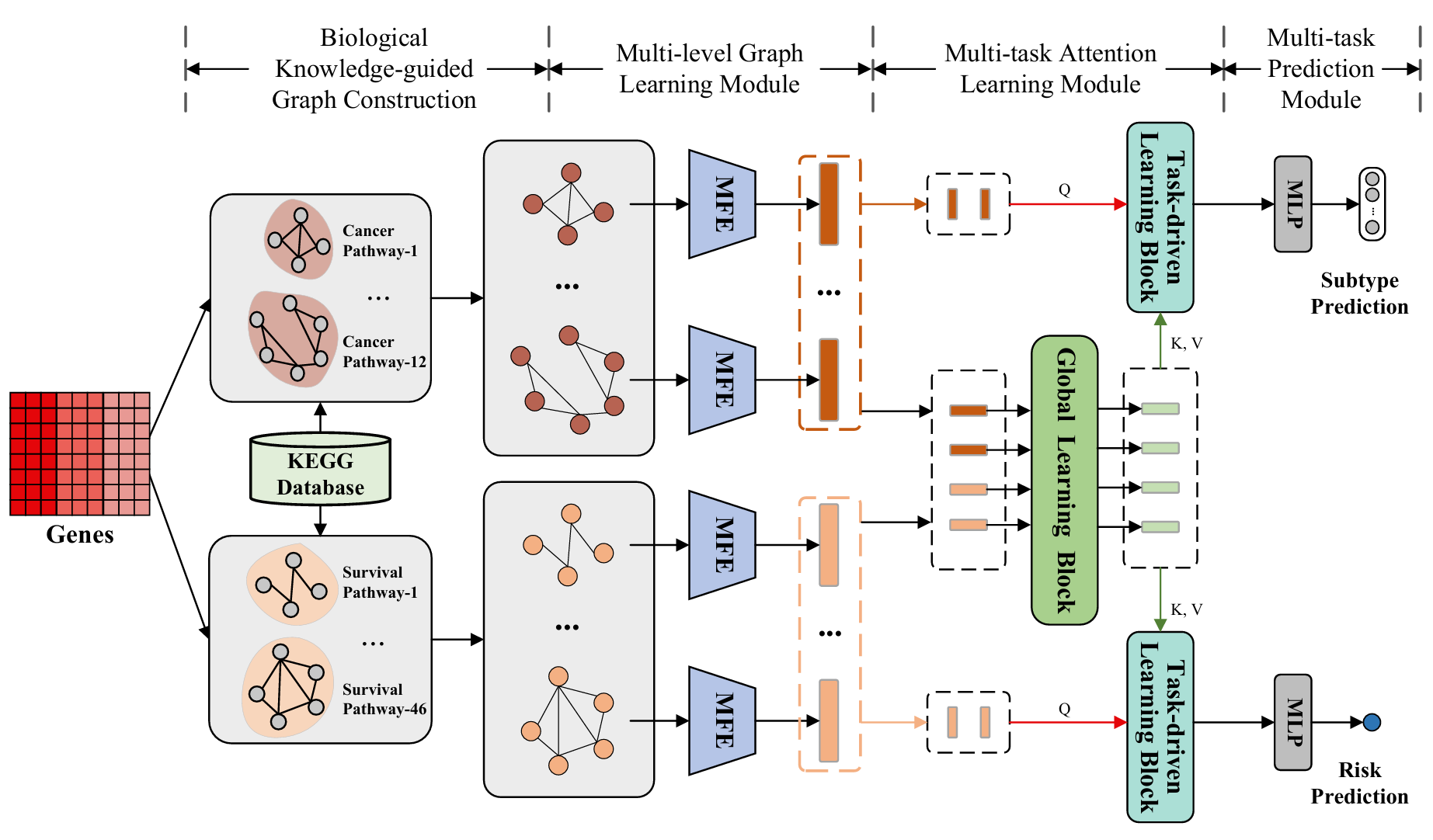

BioMTAN: A Biological Knowledge-guided Multi-task Attention Network for Co-enhanced Cancer Diagnosis and Prognosis

Ying Chen*, Jiajing Xie*, Yuxiang Lin, Yuhang Song, Wenxian Yang, Rongshan Yu† (* co-first author; † corresponding author)

IEEE Journal of Biomedical and Health Informatics 2025 (Journal)

Most existing approaches guided by biological pathways ignore the intrinsic link between diagnostic and prognostic tasks in cancer. We propose BioMTAN, a multi-task learning framework that jointly predicts molecular subtypes and survival risk.

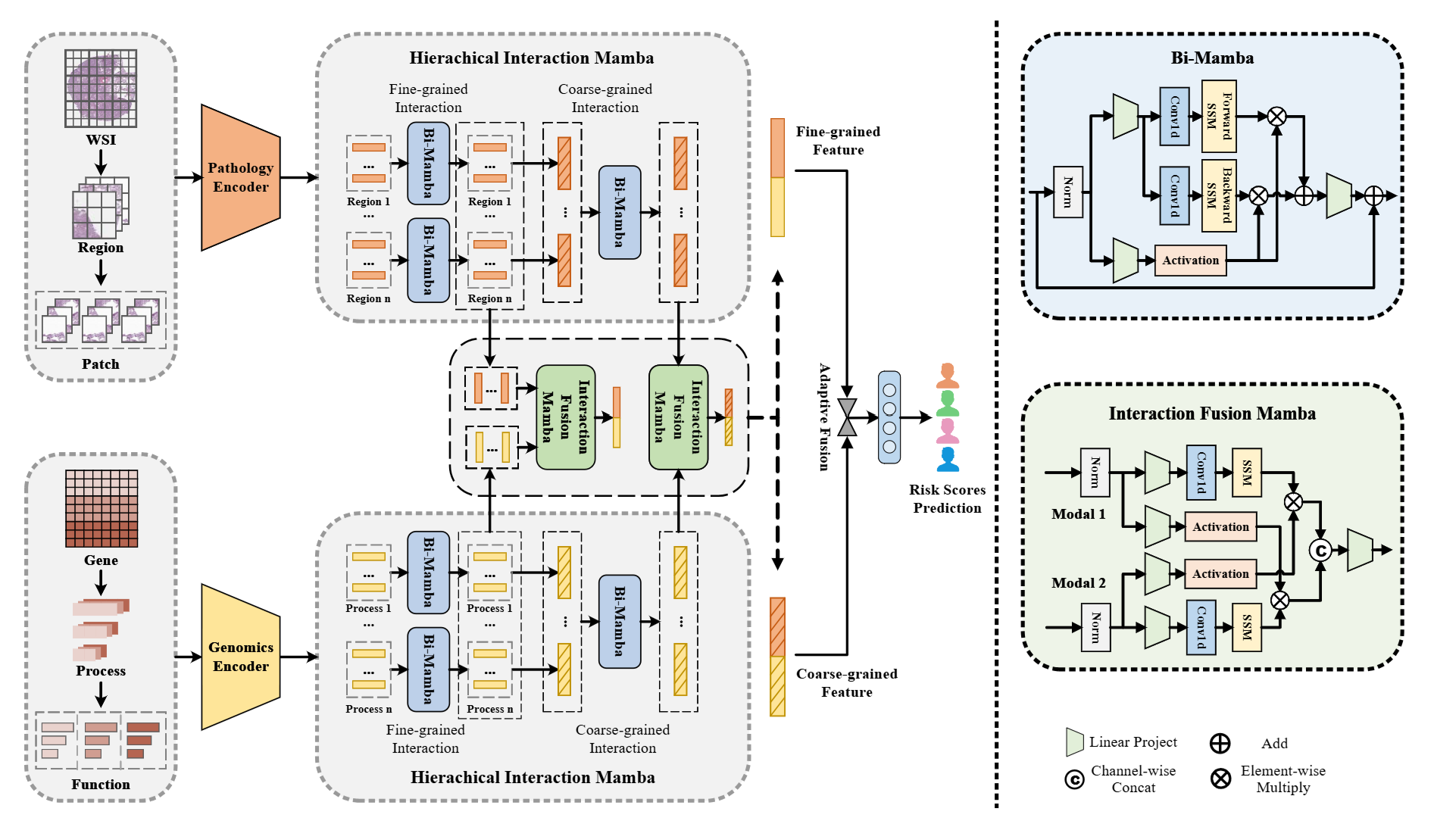

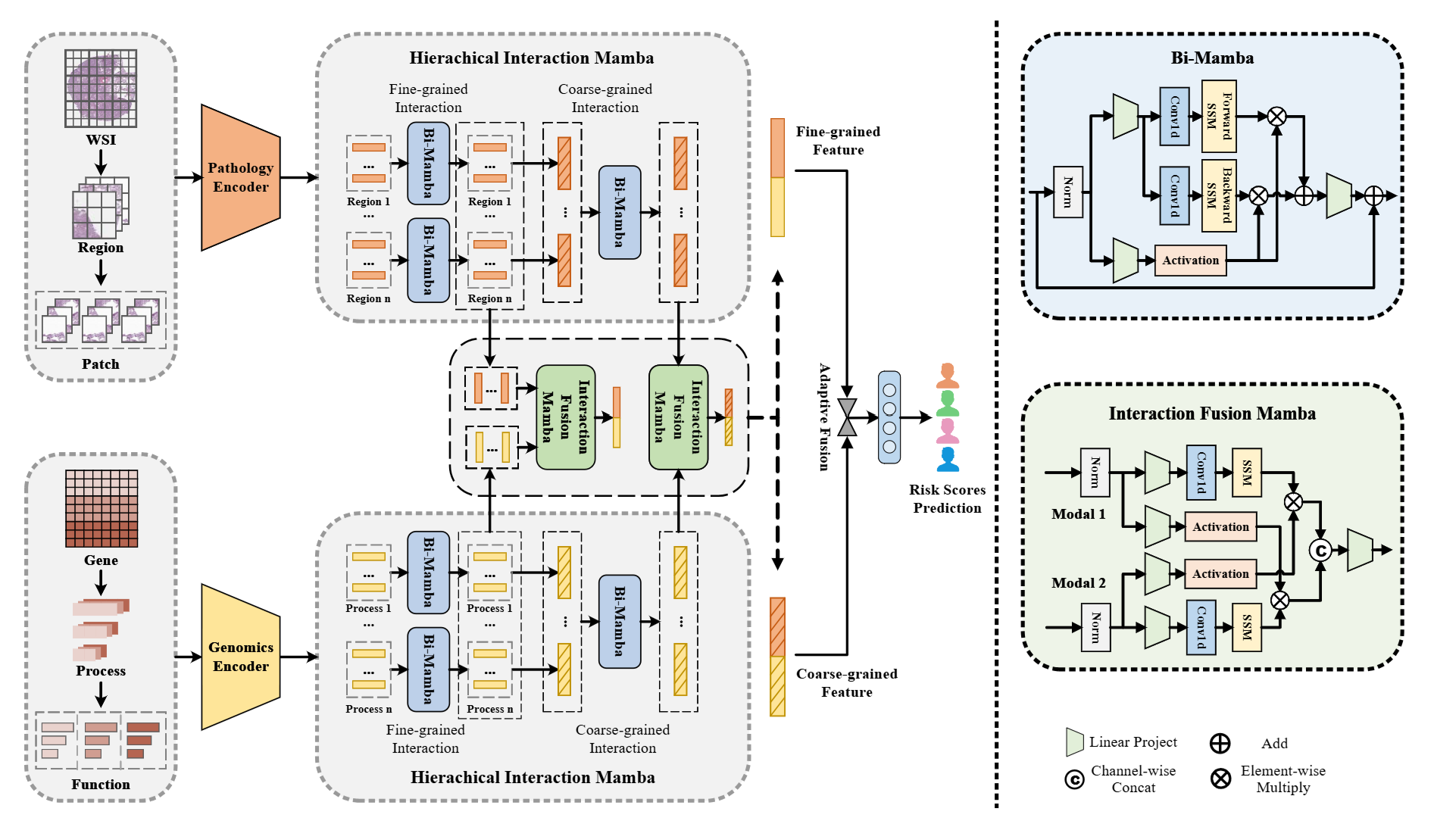

SurvMamba: State space model with multi-grained multi-modal interaction for survival prediction

Ying Chen, Jiajing Xie, Yuxiang Lin, Yuhang Song, Wenxian Yang, Rongshan Yu†(† corresponding author)

BIBM 2025 (Conference, Poster)

We propose SurvMamba, a Mamba-based state space model with multi-grained multi-modal interaction for survival prediction. Comprehensive evaluations on five TCGA datasets show that SurvMamba outperforms existing methods in both predictive performance and computational cost.

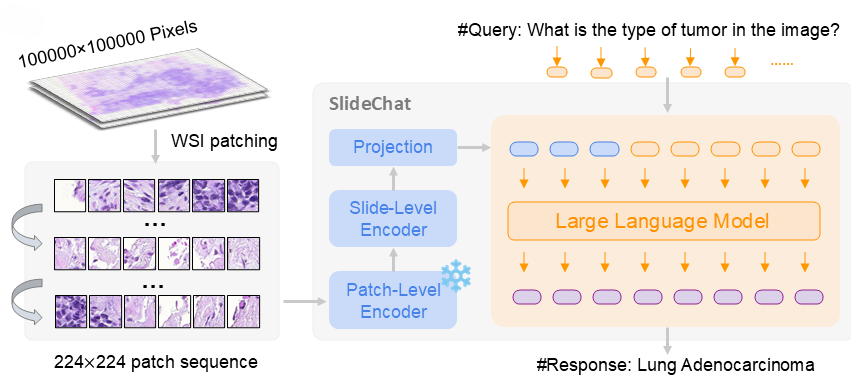

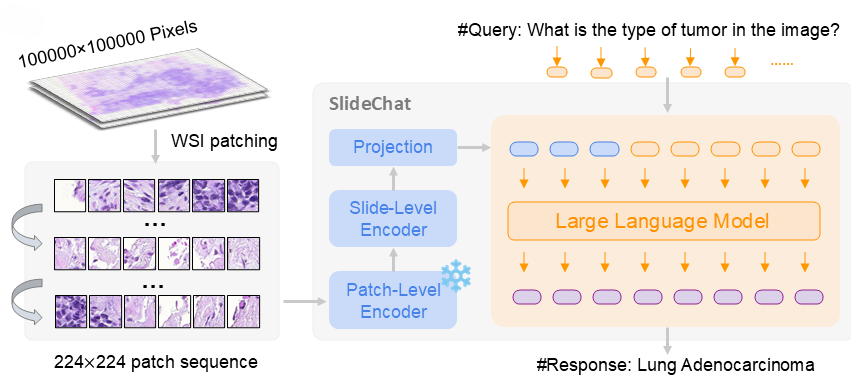

SlideChat: A large vision-language assistant for whole-slide pathology image understanding

Ying Chen*, Guoan Wang*, Yuanfeng Ji*†, Yanjun Li, Jin Ye, Tianbin Li, Ming Hu, Rongshan Yu, Yu Qiao, Junjun He† (* co-first author; † corresponding author)

CVPR 2025 (Conference, Poster)

We present SlideChat, the first vision-language assistant capable of understanding gigapixel whole-slide images. SlideChat shows strong multimodal conversational capability and responds to complex instructions across diverse pathology scenarios.

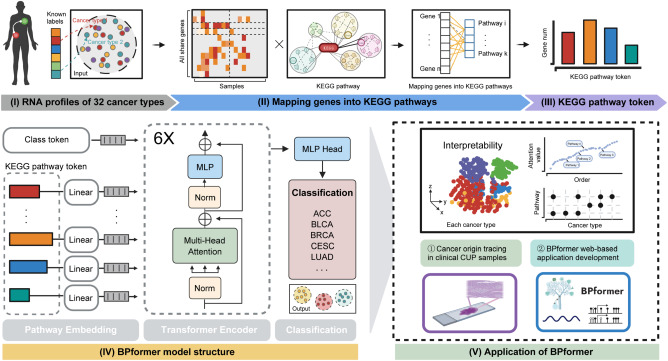

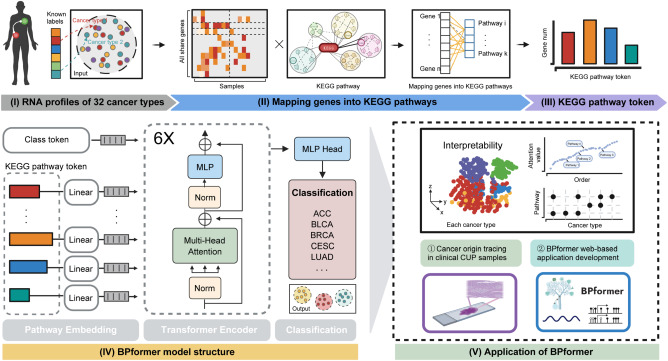

Tracing unknown tumor origins with a biological-pathway-based transformer model

Jiajing Xie*, Ying Chen*, Shijie Luo*, Wenxian Yang, Yuxiang Lin, Liansheng Wang, Xin Ding†, Mengsha Tong†, Rongshan Yu† (* co-first author; † corresponding author)

Cell Reports Methods 2024 (Journal)

Cancer of unknown primary (CUP) is metastatic cancer whose primary site remains unidentified despite standard diagnostic procedures. To determine the tumor origin in such cases, we developed BPformer, a deep learning method that integrates the transformer model with prior knowledge of biological pathways.

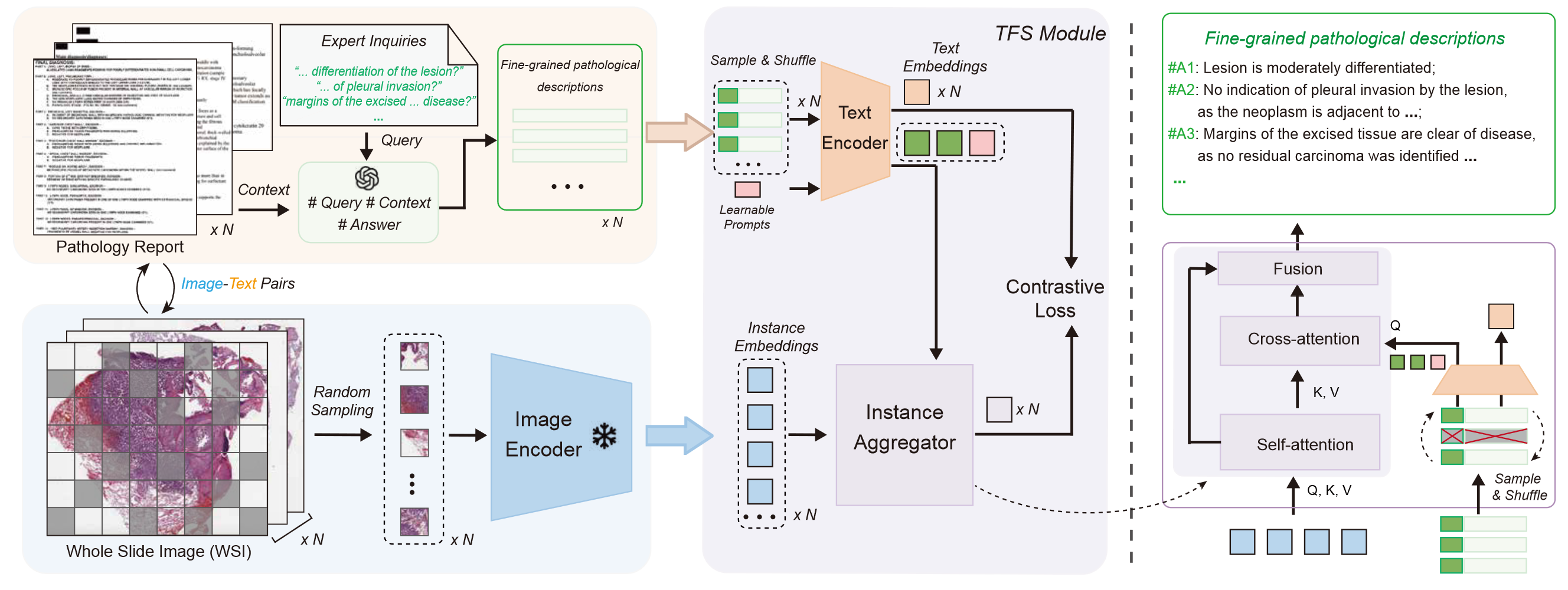

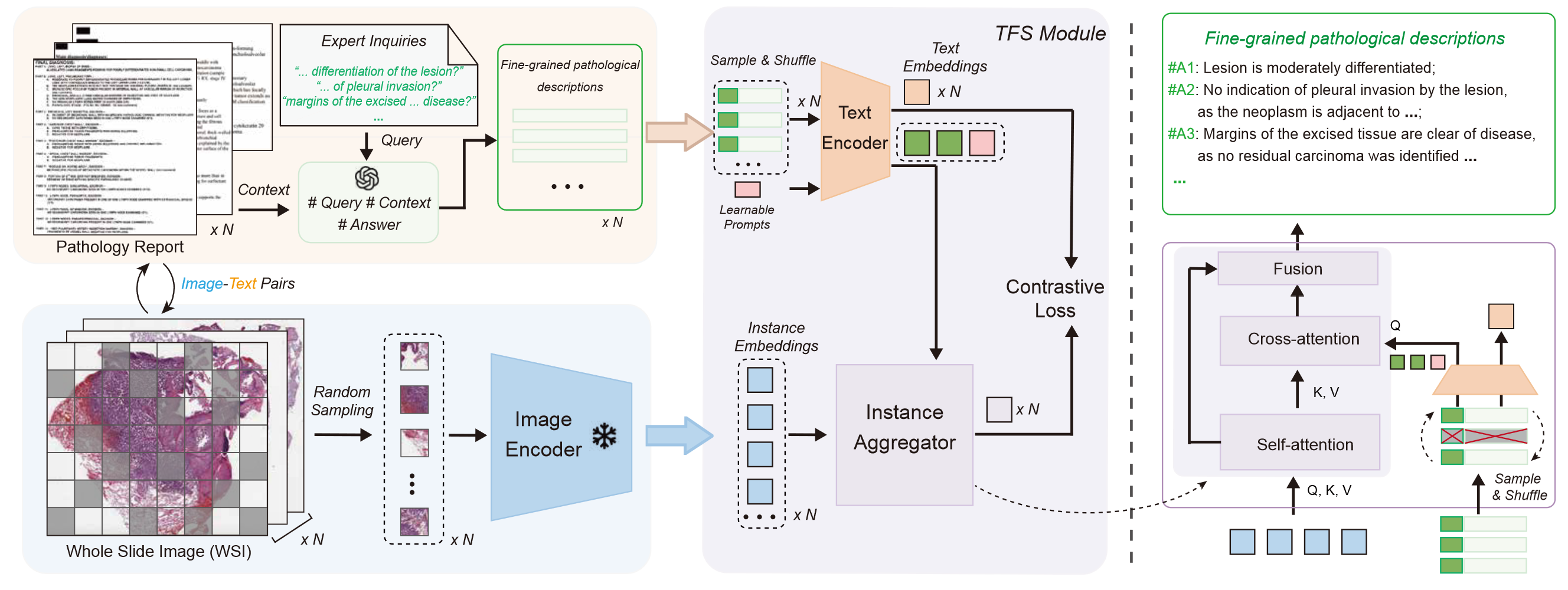

Generalizable whole slide image classification with fine-grained visual-semantic interaction

Hao Li, Ying Chen, Yifei Chen, Rongshan Yu†, Wenxian Yang, Liansheng Wang†, Bowen Ding, Yuchen Han† († corresponding author)

CVPR 2024 (Conference, Poster)

We propose a novel "Fine-grained Visual-Semantic Interaction" (FiVE) framework for WSI classification. It is designed to improve model generalizability by leveraging the interaction between localized visual patterns and fine-grained pathological semantics.

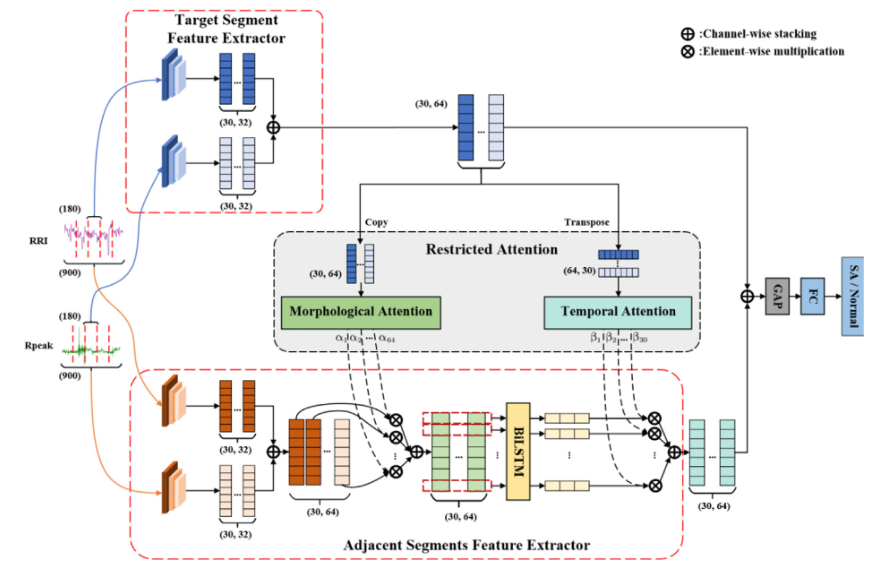

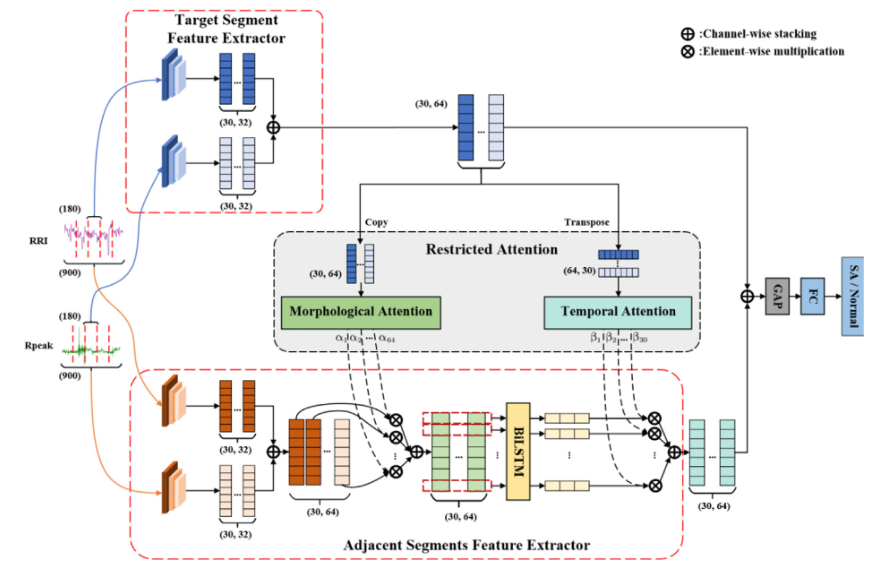

RAFNet: Restricted attention fusion network for sleep apnea detection

Ying Chen, Huijun Yue, Ruifeng Zou, Wenbin Lei, Wenjun Ma, Xiaomao Fan† († corresponding author)

Neural Networks 2023 (Journal)

We focus on sleep apnea (SA) detection with single-lead ECG signals, which can be easily collected with a portable device. In this setting, we propose RAFNet, a restricted attention fusion network for sleep apnea detection.

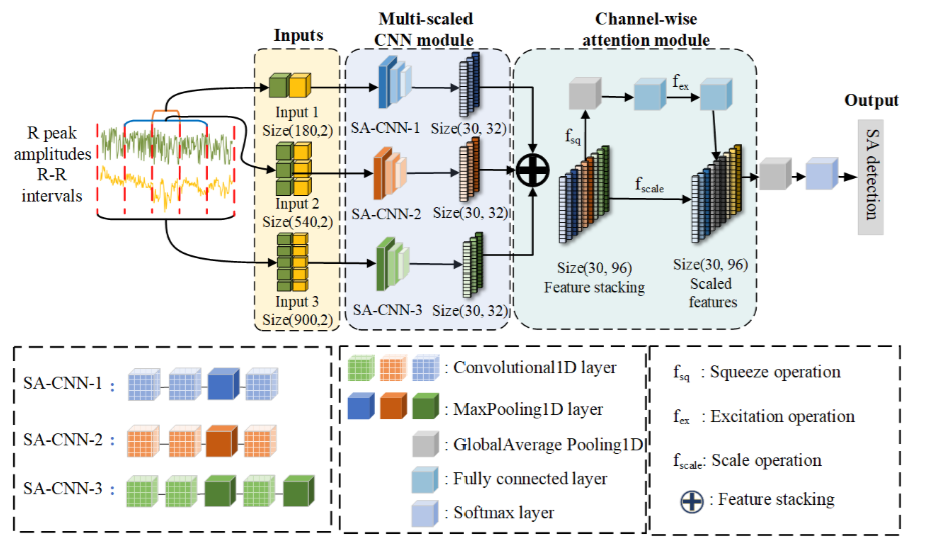

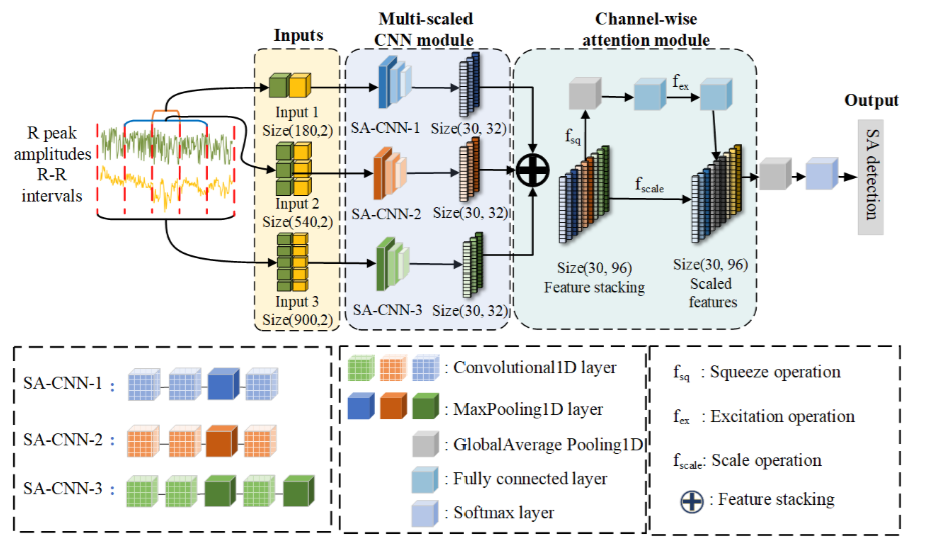

SE-MSCNN: A Lightweight Multi-scaled Fusion Network for Sleep Apnea Detection Using Single-Lead ECG Signals

Xianhui Chen*, Ying Chen*, Wenjun Ma, Xiaomao Fan†, Ye Li† (* co-first author; † corresponding author)

BIBM 2021 (Conference, Oral)

We propose SE-MSCNN, a lightweight multi-scaled fusion network for sleep apnea (SA) detection based on single-lead ECG signals acquired from wearable devices.